Introduction

Taking a systematic approach to improving our casual game is important, so that we do not lose sight of the bigger picture and get caught up polishing some minor feature that users consider unimportant.

Let's start by recapping our work and summarizing our findings so far. We will then look at the important aspects of our game and determine its current state. Finally, we look at the road map that we have planned. This should create a solid foundation on which to polish our game even further!

So what have we done so far? We developed a minimum viable product and evaluated it twice. Between these evaluations we added some content, mainly improving the core game mechanics.

First evaluation

In the first evaluation we explored the possibilities of the touch-screen controls, focusing on determining a "perfect" control scheme. This evaluation was done with a small set of fellow students.

At this point we decided to test two tapping control schemes: one where a left tap moved the dinosaur up a lane and a right tap moved it down a lane, and its inverse.

Our conclusion was that left tap - up, right tap - down felt most natural. Furthermore, people suggested swiping and tapping directly on a lane to switch to it as methods for controlling your top-hat dinosaur.

At this point we were somewhat convinced that we were on a good path with the control schemes. However, due to the small number of participants, we deemed it necessary to substantiate these conclusions with a bigger test involving more participants.

Second evaluation

In the second evaluation we got a total of 22 people to answer a questionnaire about our control schemes. We also added a third tapping control scheme, in which a tap on the top of the screen moves the dinosaur up and a tap on the bottom of the screen moves it down.

We expected that the results would corroborate our first conclusions. However, this was not necessarily the case. While people did seem to have a small preference for the control scheme put forward in the first evaluation, they did not fully dismiss the other control schemes.

Furthermore, many people again suggested swiping and instant lane switching as possible additional control schemes.

At this point we decided to keep all three control schemes in the game. We will keep the other possibilities in mind while we first focus on other aspects of our game. If time permits, we will do another evaluation that includes these two suggested control schemes.

Furthermore, during the first evaluation we noticed that people found several game mechanics somewhat confusing. At the beginning we assumed that, through the principle of learning by dying, people would understand quickly enough what to do. This was not necessarily the case. Whether this was because the game lacked visual cues for what was happening, or because the assumption itself was bad, has not yet been explored. We decided to add a small tutorial screen to the game to improve learnability, and evaluated it during the second evaluation. We concluded, however, that this did not fully solve the learnability problems, and room for improvement still exists: players still found both the scoring and the dodging of obstacles confusing. Further improvements and evaluations should help reduce this problem.

Simple breakdown of casual games.

Before we move to a proper analysis of both the current and future situation, let's first break down our game into several bite-sized chunks that provide us with handles to evaluate the different parts.

We'll divide the game into three important parts.

Core game mechanics.

| Core Game-mechanics | | |
| --- | --- | --- |
| Rules | Schedules | Tokens |

Rules describe the rules of the game. In our case these are:

- If you eat a small dino you increase your score.

- If you run into a big dino you die.

- If you run into an obstacle you die.

- You can only move to adjacent lanes.
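The four rules above can be sketched in a few lines of code. This is only an illustrative sketch, not our actual implementation: the names `Player`, `Entity`, `move` and `collide`, the lane count of three, and the one point per small dino are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum

LANE_COUNT = 3  # assumption: the number of lanes is not stated in the text


class Entity(Enum):
    SMALL_DINO = "small_dino"
    BIG_DINO = "big_dino"
    OBSTACLE = "obstacle"


@dataclass
class Player:
    lane: int = 1
    score: int = 0
    alive: bool = True


def move(player: Player, delta: int) -> None:
    """Move one lane up (-1) or down (+1); any other step is ignored."""
    if delta not in (-1, 1):
        return  # rule: you can only move to adjacent lanes
    new_lane = player.lane + delta
    if 0 <= new_lane < LANE_COUNT:
        player.lane = new_lane


def collide(player: Player, entity: Entity) -> None:
    """Apply the collision rules to the player."""
    if entity is Entity.SMALL_DINO:
        player.score += 1  # assumption: one point per dino, multiplier ignored here
    else:
        player.alive = False  # big dinos and obstacles are both fatal
```

Writing the rules down this way makes it easy to see that a big dinosaur and an obstacle behave identically from the player's point of view, which may be part of why players found dodging confusing.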

Schedules describe when events happen:

- After x time the game speeds up

- After x time the multiplier increases.
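As a rough sketch, both schedules can be written as functions of the elapsed play time. The interval lengths, base speed and step size below are placeholder values for the unspecified "x", and we assume here that each schedule repeats at a fixed interval; none of these numbers come from the game itself.

```python
SPEEDUP_INTERVAL = 10.0     # seconds between speed-ups (placeholder for "x")
MULTIPLIER_INTERVAL = 15.0  # seconds between multiplier increases (placeholder)
BASE_SPEED = 1.0            # starting speed in arbitrary units (assumed)
SPEED_STEP = 0.25           # speed gained per interval (assumed)


def speed_at(elapsed: float) -> float:
    """Game speed after `elapsed` seconds, rising once per full interval."""
    steps = int(elapsed // SPEEDUP_INTERVAL)
    return BASE_SPEED + steps * SPEED_STEP


def multiplier_at(elapsed: float) -> int:
    """Score multiplier after `elapsed` seconds, starting at 1."""
    return 1 + int(elapsed // MULTIPLIER_INTERVAL)
```

Keeping the schedules as pure functions of time makes them easy to tweak and to show to the player, for example as a countdown to the next multiplier.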

Tokens describe points, in-game currency etc.

- The score

- The number of small dinosaurs eaten.
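The two tokens are related but not identical once a multiplier is involved. A minimal sketch, assuming one point per small dinosaur scaled by the current multiplier (the `Tokens` class and its method name are hypothetical):

```python
from dataclasses import dataclass


@dataclass
class Tokens:
    score: int = 0        # points shown to the player
    dinos_eaten: int = 0  # raw count of small dinosaurs eaten

    def eat_small_dino(self, multiplier: int = 1) -> None:
        """Record one eaten small dino and award points for it."""
        self.dinos_eaten += 1
        self.score += multiplier  # assumption: one point per dino, times the multiplier
```

With a multiplier of 3, a single small dinosaur raises the count by one but the score by three, which is the kind of difference a visual indicator would need to explain.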

Interaction Mechanics

| Interaction Mechanics | | |
| --- | --- | --- |
| Control Schemes | Interaction | Menu navigation |

Control schemes describe the fixed mappings from input to in-game actions.

Touch-screen devices:

- left - up, right - down

- left - down, right - up

- top - up, bottom - down

Keyboard devices:

- 'w' - up, 'a' - down

- 'up' - up, 'down' - down
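The three touch-screen schemes listed above differ only in how a tap position is translated into a direction. The sketch below illustrates that idea; the names `Scheme`, `Direction` and `tap_to_direction` are made up for the example, and it assumes a screen origin in the top-left corner with the screen split exactly in half.

```python
from enum import Enum


class Direction(Enum):
    UP = "up"
    DOWN = "down"


class Scheme(Enum):
    LEFT_UP_RIGHT_DOWN = 1
    LEFT_DOWN_RIGHT_UP = 2
    TOP_UP_BOTTOM_DOWN = 3


def tap_to_direction(scheme: Scheme, x: float, y: float,
                     width: float, height: float) -> Direction:
    """Translate a tap at (x, y) into a lane change for the given scheme.

    Assumes (0, 0) is the top-left corner, so y grows downward.
    """
    if scheme is Scheme.TOP_UP_BOTTOM_DOWN:
        return Direction.UP if y < height / 2 else Direction.DOWN
    left_half = x < width / 2
    if scheme is Scheme.LEFT_UP_RIGHT_DOWN:
        return Direction.UP if left_half else Direction.DOWN
    # Remaining case: the inverse scheme, left is down and right is up.
    return Direction.DOWN if left_half else Direction.UP
```

Because the scheme is just a parameter, keeping all three in the game costs very little, and a swipe or instant lane switching scheme could later be added as a separate handler.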

Interaction describes how users use the control schemes.

Aesthetics

| Aesthetics | | | |
| --- | --- | --- | --- |
| Visual Aesthetics | | Sound | |
| Menus | Game-Mechanics | Music | Interaction |

Aesthetics describe the look and feel of a game.

Visual aesthetics include the menus and the representation of the current game mechanics. Sound covers the background music and the sounds associated with interaction.

We can evaluate different aspects of these three major subsets according to the criteria.

Current State

Given this breakdown into three areas, what is the current state of this project?

So far we have evaluated the interaction mechanics of our game on touch-screen devices.

We have also evaluated the learnability of our game-mechanics, and found several problem areas that could benefit from improvement.

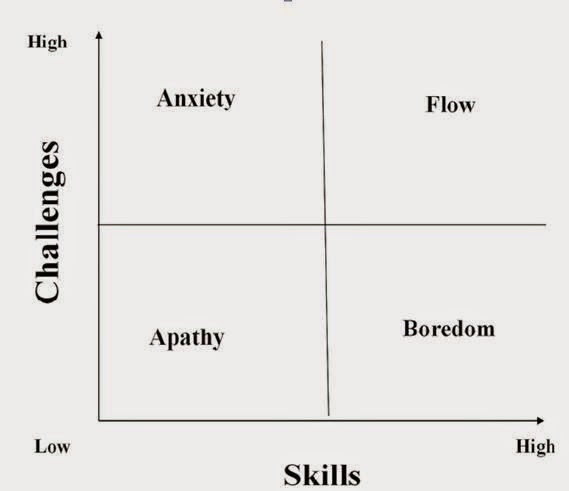

Now you could ask "Why would you evaluate the learnability before evaluating if people actually like your game, and its game mechanics?"

The answer has an element of the chicken and the egg in it. On the one hand the game-mechanics need to be fun before you spend time on improving the learnability, but on the other hand can game-mechanics truly be fun if they are extremely difficult to learn?

We chose to optimize the learnability first for several reasons:

- We noticed during the first evaluation that people seemed to enjoy playing the game, and were neither bored nor extremely annoyed. Playing the game ourselves, we came to the same conclusion: the game-mechanics are enjoyable.

- Casual games have a low retention rate. If players do not understand the goals of a game, they will most likely just install a different game instead of trying to figure out the goals the developers intended. So it is important to make the game as easy to learn as possible.

- Having people understand the mechanics allows them to give better feedback on the actual (intended) game-mechanics.

Of course, opting for this strategy has drawbacks. Most importantly, you could waste resources by creating, optimizing and evaluating the learnability of a game-mechanic that gets canned later in the development process, once the actual game mechanics are evaluated.

However, when the learnability of a game-mechanic takes too long to improve, or users keep finding it hard to understand, that is a signal to evaluate whether the game-mechanic itself works. Thus opting for this strategy also acts as a preliminary evaluation to explore which game-mechanics work and which do not.

So far we have found that the learnability of the game could be improved. The areas that users mentioned most often were the multiplier and the dodging of obstacles.

Rough roadmap - Future evaluations and plans.

Our first aim is to have our casual game released 'in the wild', which means releasing a web-browser version and an Android version of our game, and spreading the word about this release on social media. We will not release an iOS version, due to the notoriously slow process of getting an app released on the App Store.

Before we can do this release, we want to incorporate some form of analytics. At this moment we are looking at Google Play Games Services, which should allow us to track different kinds of statistics when people play our game.

Furthermore, we wish to work on several of the problems that surfaced in our first learnability evaluation. We identified the main problems to be the scoring and multiplier, and the dodging of obstacles and big dinosaurs. We plan to solve these by improving and possibly redesigning the tutorial. We will also add several visual indicators to the scoring and multiplier. Once implemented, these improvements will need to be evaluated through user testing.

Possible longer term areas that could be improved and/or evaluated are (in no particular order):

- Evaluation and tweaking of the core game mechanics.

- Experimentation with non-tap game controls on touch-screen devices. These could be for example swiping and instant lane switching.

- Several proper standardized questionnaires related to the current state of our game.

For future longer term plans, we would like to add evolution/growing as a game-mechanic. We also hope to be able to improve the aesthetics of the game. This would include animations for the different dinosaurs, and a redesign of the looks of the lanes and background of the game.

Of course, these plans are only a rough road map; if we encounter any pressing problems, these will get priority.

TL;DR:

So far we have done two evaluations in which we explored the controls of touch-screen devices and the learnability of our current game.

At this point we decided to incorporate three different control scheme layouts, and we will experiment more with other schemes as well.

We learned that the learnability of our game could be improved. We plan on doing this by adding visual elements to scoring, and improving the tutorial.

In the future we would like to evaluate the core game-mechanics, do more testing related to the controls and do several standardized tests to see how our game performs.
